In the last edition of this newsletter, I shared some of my thinking about A.I. in education, spurred by a conference I had recently attended. Since then, I presented at another conference, where I also took part in a panel discussion about A.I. in education. This topic is everywhere for me right now!

At the end of the last edition, I shared a link to a survey that asked you all a few questions about A.I., and whether you’d like to hear more about it. Thanks to the many of you who chimed in! There was a lot of support for the idea that you’d like to learn more. So I think we’ll play around with A.I. in education for the next few posts to see what we can see about this topic.

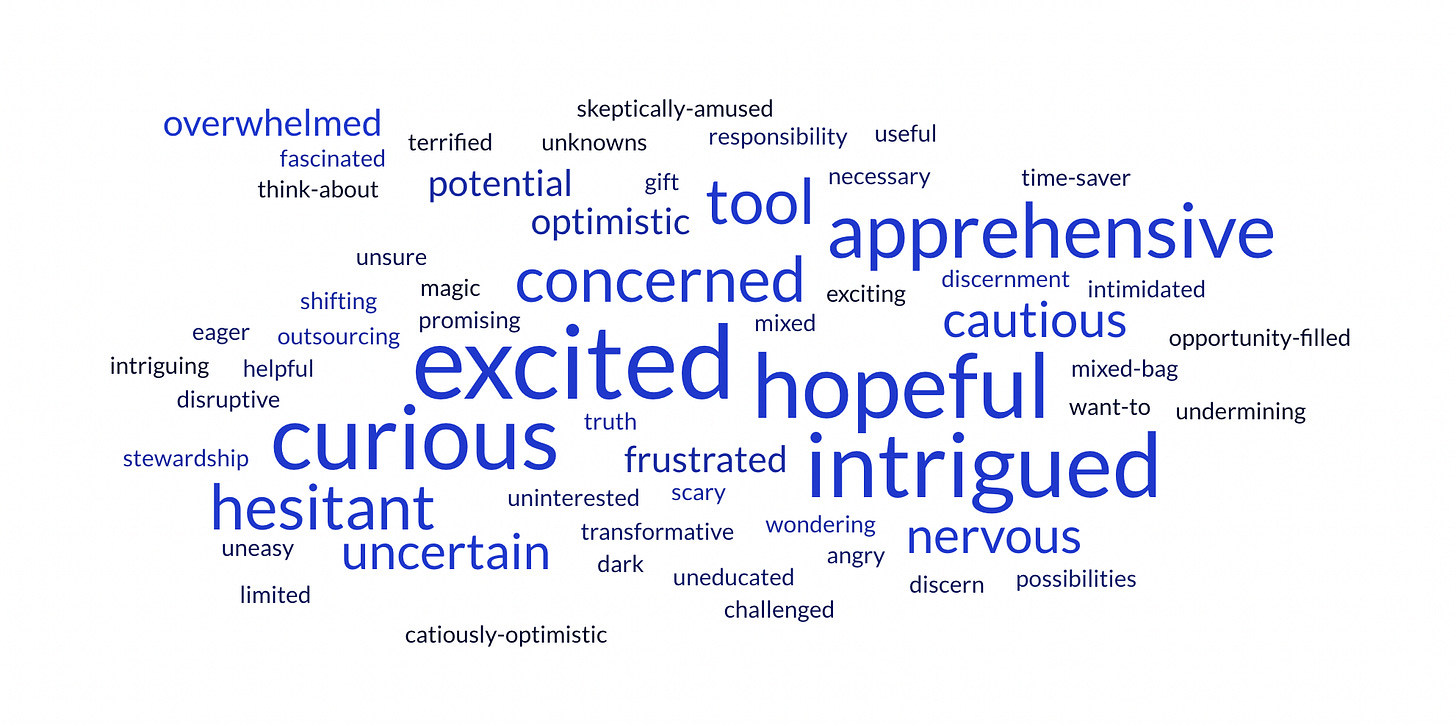

First off, let me share this: I asked you all to give three words you would use to describe how you are feeling about A.I. in education. Here’s a word cloud of the results, crowd-sourced from you, dear readers:

I should say that none of these results surprised me. It was interesting to see that the most common descriptors are “excited,” “hopeful,” “curious,” and “intrigued.” Right behind these more positive words are “apprehensive,” “concerned,” “hesitant,” and “cautious.” I think that is a mark of wisdom. We can be both excited and apprehensive. It makes sense to be both hopeful and concerned. Curious and hesitant is a perfectly reasonable pair. So is intrigued and cautious. What I’m taking away from this is that it’s worth us thinking together about both the potential good and the potential problems of A.I. in education.

In my survey I also asked about policies your schools have for student and teacher uses of A.I. for their work. Here were the results from the folks who answered those questions:

I’m pretty intrigued by these results, and I’m now wondering why schools might have different expectations for their students’ use of A.I. and teachers’ use of A.I. I am making a pretty bold assumption here that most teachers and schools are thinking about A.I. chatbots (like ChatGPT or Gemini) and their text-based outputs, rather than A.I. tools that produce images, audio, video, or other forms of media. But it is interesting to note that these results indicate schools are more concerned about how students are using A.I. than how the teachers are using these powerful tools.

Admittedly, I think I’m more concerned about students too, because I don’t think they have developed the cognitive capacities to be good judges of the output from an A.I. chatbot. One of the things I said in the panel discussion at that conference was how much I think we need to emphasize teaching both basic skills and knowledge as well as critical thinking skills. In an A.I.-rich world…how else will we be able to judge the outputs?

This has been a shift of thinking for me, honestly. There was a time in my teaching career when I know I actually said aloud: “If the students can google the answer to the question in 20 seconds or less, do they really need to memorize it?” I fully recant that idea today. Students NEED basic knowledge and understanding, and I believe we are doing them a disservice in both the short and long term if we do not equip them with foundational knowledge from a wide range of fields, so they can quickly and carefully judge the trustworthiness of A.I.-generated outputs.

This idea connects with the comments some of you left in response to my survey about the things you’d like to learn about A.I., which included:

“I would love to learn how we can best teach our students to use AI well. How can we help them discern the best ways to use AI and the best times to put it aside.”

“How to have policies that allow some use of AI tools... How to help students not to depend on it, to get dumber in the process...”

“How to teach students to use it as a tool.. not as a way to "cheat" or get quick answers without the learning.”

“Where's the line between being a responsible user and being lazy? ;) How do you teach teachers and students to use it responsibly? I love the tools but I am getting a little uneasy with how easy it is. Reflection needs to be a part of the use.”

I think these are fabulous wonderings, friends. These are things we definitely need to grapple with! We’ll get into this more deeply in future editions, but for now, let me give three words of encouragement:

We have to keep this in mind: our students, and we too as teachers, are created in God’s image. God is the Creator. (Period. Full stop.) But…being created in His image means that we too are creators (small “c” creators, mind you). We have been created to create. And while generative A.I., like these powerful chatbots that have become so prevalent, seems to be creating things, let’s remember that it is not really creating. These tools are cobbling things together based on the data that was used to train them. The “thinking” an A.I. chatbot does is not human thinking. It is a word-association game, predicting what the user is hoping to see, rather than actually creating a novel idea. When and if we have our students use A.I.-powered tools, we have to help them understand their role in the work as the creators, rather than relegating that role to the A.I., which is really just a constructor rather than a creator.

I think an ethical approach to using A.I. as an educator means helping students view the tools they are using as tools. Admittedly, these are potentially POWERFUL tools, so in the same way you wouldn’t let students use actual power tools (saws and drills and nail guns) without some instruction, supervision, and feedback, students need that kind of careful encouragement here too. We use different tools to support learning, and we use them in different ways. A.I.-powered tools are almost certainly here to stay, so let’s work to equip students with the knowledge they need to use the tools well. And by “use the tools well” I mean let’s help them learn how to do human work as human beings, and use the tools to help them do human work, rather than replace human work. If they aren’t actually learning…we probably aren’t actually teaching them.

If our whole approach towards students’ use of A.I. is to try to prevent cheating, we are going to transform from teachers into police. If you want to be a cop, go ahead and be a cop…but I got into this profession because I want to teach. I’m not naive, and I hope you aren’t either: students do cheat sometimes, unfortunately. This was true before ChatGPT, and it is true today. So please hear me in this: I am NOT suggesting that we shouldn’t care about students who might be tempted to cheat on their work by using A.I. Rather, I think we should approach this situation as teachers, and not as cops. Let’s teach them about what it means to be human. Let’s teach them about the ways we can use powerful tools to support our work and learning. Let’s teach them to be ethical, thoughtful, conscientious human beings. This might mean discipline (or how about discipling?) if they are using tools inappropriately…but it might also mean carefully considering the kind of work we’re assigning to the students. If they are tempted to short-circuit their own learning by not doing the real work for themselves…well, are there different things we might do to make the work more meaningful and accessible to them?

Welcome to this brave new world, friends. I believe A.I. is here to stay. There are challenges coming, to be sure. But I think there is also a lot of potential for us to explore. In coming editions, we’ll do just that!

Do you have more questions, or ideas you’d like to share? Please hit that comment button and let us know what you’re thinking about!

Some A.I. Resources

Here are three things you might find helpful for your considerations about artificial intelligence and education:

The Problem with Chatbot Personas by Dr. Derek Schuurman. Derek is a friend of mine who teaches computer science at Calvin University and has a background in machine learning. In this piece he shares about an A.I. chatbot he trained to respond in the persona of C.S. Lewis. It’s a helpful, thoughtful article for considering what happens when we start to treat machines like people.

The 3 AI Use Cases: Gods, Interns, and Cogs by Drew Breunig. Breunig is a technology researcher and writer, and in this piece he describes three ways people think about the role of A.I. in society, and why we (probably) don’t need to worry about sci-fi-style machines taking over the world…but why we also should probably be more critical about the way we actually use A.I.

Our latest episode of Hallway Conversations—What Should We Do about AI in Education? (or, Matt has a lot of questions, and Abby and Dave have ideas)—might also be helpful and encouraging for you. In it we try to demystify A.I. a bit, and share some ideas for how teachers could try some things to learn how A.I. might help their own work.

Dave’s Faves

Here are three things I’m absolutely loving right now that I hope you might love too…

Dave’s Fave #1: Video recaps (that include me!)

Here’s a video recap of ACSI’s recent Flourishing Schools Institute, where I had the privilege of speaking. It was a great conference!

Dave’s Fave #2: Reflections on learning

I got to hang out with my pal, Dr. Jared Pyles of the Center for Teaching and Learning at Cedarville University, at AECT in October. (Dr. Pyles is also a Boise State alum. Go Broncos!) He came to my session about my approach for teaching “professional language” (a.k.a. vocabulary) in Introduction to Education, where I use podcasts and low-stakes online quizzes. He wrote up a reflection about the session afterward, and posted it on their blog. You can read it here: 2024 AECT Reflection – Jargon, Memorization, and Understanding.

Dave’s Fave #3: Spotify’s recommended playlists

I love that Spotify recommends music for me. (Speaking of A.I., here is one more use—recommendation engines!) The data they collect generally allows them to recommend things that I like, which is maybe a little creepy, but not icky, I guess? Last week I was recommended a playlist called “Sweater Weather Instrumentals,” and I found myself listening to it all week long. So I guess I’ll recommend it to you all as well, friends. If you’re in need of some background music that feels like autumn, give this one a whirl.

The Last Word!

While it’s certainly possible to overthink things (I am an expert at this, friends), I still think that thinking is a good thing. :-) I love this quote from the brilliant and famous Nobel Prize-winning biochemist, Linus Pauling, who certainly had plenty of good ideas throughout his long and illustrious career.

I hope you’ll agree with me that thinking is a good thing, and I hope that this week’s newsletter encourages you to keep thinking about A.I. Have some conversations with colleagues, or drop your comment in response to this post below, and let’s keep the conversation going!